Modular MEV Explained: How Can Fair Transaction Ordering Be Achieved?

Author: Maven11

Compiled by: Luffy, Foresight News

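In the first two parts of this series, we focused mainly on the technical problems that arise when the stack is split apart and on the improvements a modular world needs. We covered a lot of ongoing work aimed at the problems that naturally emerge in cross-domain settings. In this final part, however, we want to focus more on user experience. We want to examine how modularity, customization, and specialization can help create better applications. This last chapter looks at the exciting and unique creative possibilities that modularity gives developers for building Web2-style user experiences with Web3 verifiability.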

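The reason to build modular should not be merely to fit a narrative, nor modularity for its own sake, but that it lets us build better, more efficient, and more customizable applications. Many unique capabilities emerge when building modular and specialized systems. Some are obvious, others less so. Our goal is therefore to give an overview of the capabilities of modular systems that you may not know about, beyond the obvious ones such as scalability.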

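We believe one of the capabilities modularity gives developers is the ability to build highly customizable, specialized applications that deliver a better experience to end users. We have previously discussed the ability to set rules for, or reorder, the sequence in which transactions execute.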

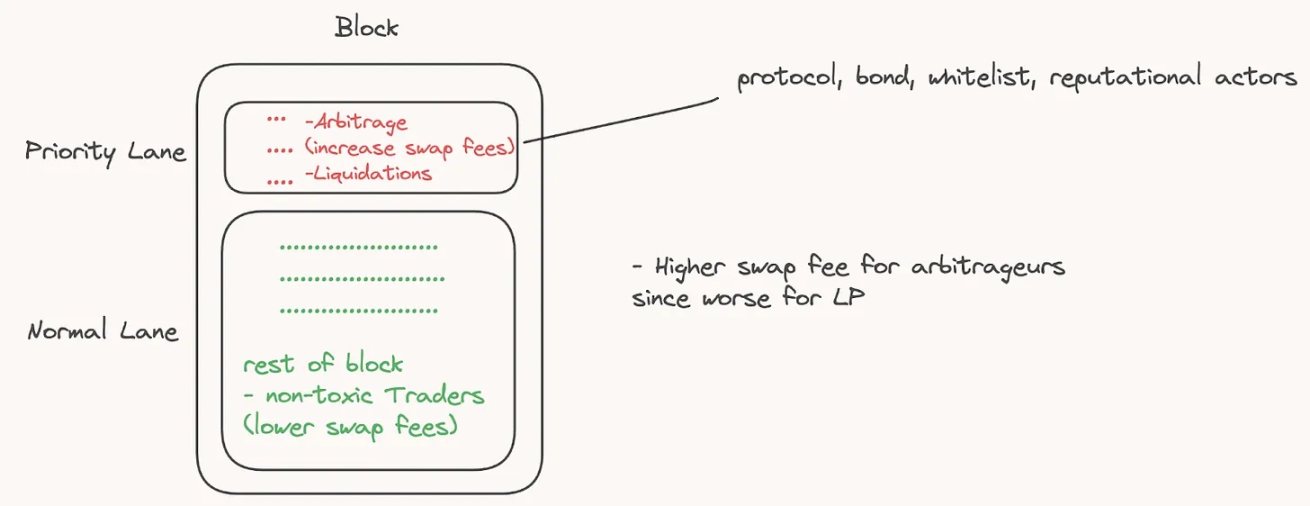

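Verifiable sequencing rules (VSRs) are one of the interesting opportunities that control over ordering provides, especially for developers interested in building "fairer" trading systems on the execution side. Their relationship to liquidity providers' loss-versus-rebalancing (LVR) is beyond the scope of this article, so we will avoid going deep into it. Keep in mind that the setups we are about to explain primarily target AMMs rather than order-book models. That said, CLOBs (and even CEXs) would also benefit greatly from verifiable sequencing rules suited to their particular setup. In an off-chain setting, some notion of zero-knowledge or optimistic execution backed by cryptoeconomic security is clearly required.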

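VSRs are particularly interesting once we consider that most retail traders have not adopted (and are unlikely to adopt) protection methods. Most wallets and DEXs have not implemented private mempools, protected RPCs, or similar methods either. Most transactions are submitted directly through a front end (whether an aggregator's or a DEX's). So unless the application directly intervenes in how its flow and orders are handled, the execution end users get is likely to be suboptimal.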

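The role of VSRs becomes clear when we consider where ordering sits in the transaction supply chain. It is where specialized actors order (or include) transactions, usually based on some auction or base fee. This ordering matters a great deal: it determines which transactions execute and when. In essence, whoever holds the right to order holds the ability to extract MEV, usually captured in the form of priority fees (or tips).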

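It can therefore be interesting to write rules for how ordering is handled so that end users get fairer trade execution (in a DEX setting). If you are building a general-purpose network, however, you should generally avoid committing to such rules.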

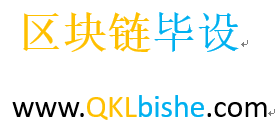

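In addition, some MEV is important, such as arbitrage and liquidations. One idea is to build a "highway" lane at the top of the block reserved for whitelisted arbitrageurs and liquidators, who pay higher fees and share part of their revenue with the protocol.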

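In the paper "Credible Decentralized Exchange Design via Verifiable Sequencing Rules", Matheus V. X. Ferreira and David C. Parkes propose a model in which a block's sequencer is constrained by a set of execution-ordering rules, and those constraints are verifiable. If the rules are not followed, an observer can produce a fault proof (or, since the constraints are mathematically verifiable, you could also imagine a ZK circuit encoding them, with a ZKP serving as a validity proof). The main idea is essentially to give end users (traders) an execution-price guarantee: a trade executes at a price at least as good as if it were the only trade in the block. Clearly some latency is involved here if we assume first-come-first-served ordering that alternates buy/sell/buy/sell.

The basic idea of the proposal is that these rules restrict the builder (in a PBS scenario) or the sequencer to including trades in the same direction (for example, sell/sell) only if they execute at a price better than the one available at the top of the block. Moreover, if a sell comes at the end of a run of buys (for example, buy, buy, buy, sell), that sell will not be executed, since the pattern may indicate that a searcher (or the builder/sequencer) used those buys to push the price in their favor. This essentially means the protocol rules guarantee that users will not be used to give someone else a better price (that is, MEV), and will not suffer slippage caused by priority fees. The obvious drawback of the rule, when sells outnumber buys or vice versa, is that orders in the long tail may get relatively poor prices.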
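
To make the idea of a verifiable rule concrete, here is a minimal sketch of a checker that any observer could run over a proposed block. It is a simplified illustration in the spirit of the rule described above, not the paper's exact algorithm. It assumes a fee-free constant-product pool, and the names `Order`, `apply_order`, and `verify_sequence` are hypothetical. The check is: while both buys and sells remain, the next order must be a buy if the pool price is at or below the top-of-block price, and a sell otherwise; once only one direction remains, the tail is accepted as is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    side: str      # "buy" or "sell" of the base token
    amount: float  # buy: quote tokens paid in; sell: base tokens paid in


def apply_order(base, quote, order):
    """Execute one order on a fee-free constant-product pool (assumption)."""
    k = base * quote
    if order.side == "buy":
        quote += order.amount
        base = k / quote
    else:
        base += order.amount
        quote = k / base
    return base, quote


def verify_sequence(base0, quote0, orders):
    """Return (True, None) if the ordering passes, else (False, index)."""
    base, quote = base0, quote0
    top_of_block_price = quote0 / base0
    for t, order in enumerate(orders):
        remaining_sides = {o.side for o in orders[t:]}
        if len(remaining_sides) > 1:
            # Both directions remain: the next order must not be one that
            # executes at a worse price than the top of the block.
            price = quote / base
            expected = "buy" if price <= top_of_block_price else "sell"
            if order.side != expected:
                return False, t
        base, quote = apply_order(base, quote, order)
    return True, None


alternating = [Order("buy", 10.0), Order("sell", 5.0),
               Order("buy", 10.0), Order("buy", 10.0)]
pushed = [Order("buy", 10.0), Order("buy", 10.0),
          Order("buy", 10.0), Order("sell", 5.0)]
print(verify_sequence(1000.0, 1000.0, alternating))  # (True, None)
print(verify_sequence(1000.0, 1000.0, pushed))       # (False, 1)
```

The second sequence is the buy, buy, buy, sell pattern from the text: the checker flags the second buy because a sell was still available while the price sat above the top-of-block price. The index it returns is the kind of evidence a fault proof could point at.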

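For a general-purpose smart contract platform, implementing these rules as purely on-chain constructs is nearly impossible, because you do not control execution and ordering. You are also competing with many others, so trying to secure the top of the block through priority fees would be unnecessarily expensive. One of the features of a modular setup is that it lets application developers customize how their execution environment should behave. Whether that means sequencing rules, a different virtual machine, or custom changes to an existing VM (such as adding new opcodes or changing gas limits) is really up to the developers and their product.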

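In a case where a rollup uses a data availability and consensus layer as well as a settlement layer for liquidity, a possible setup looks like this: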

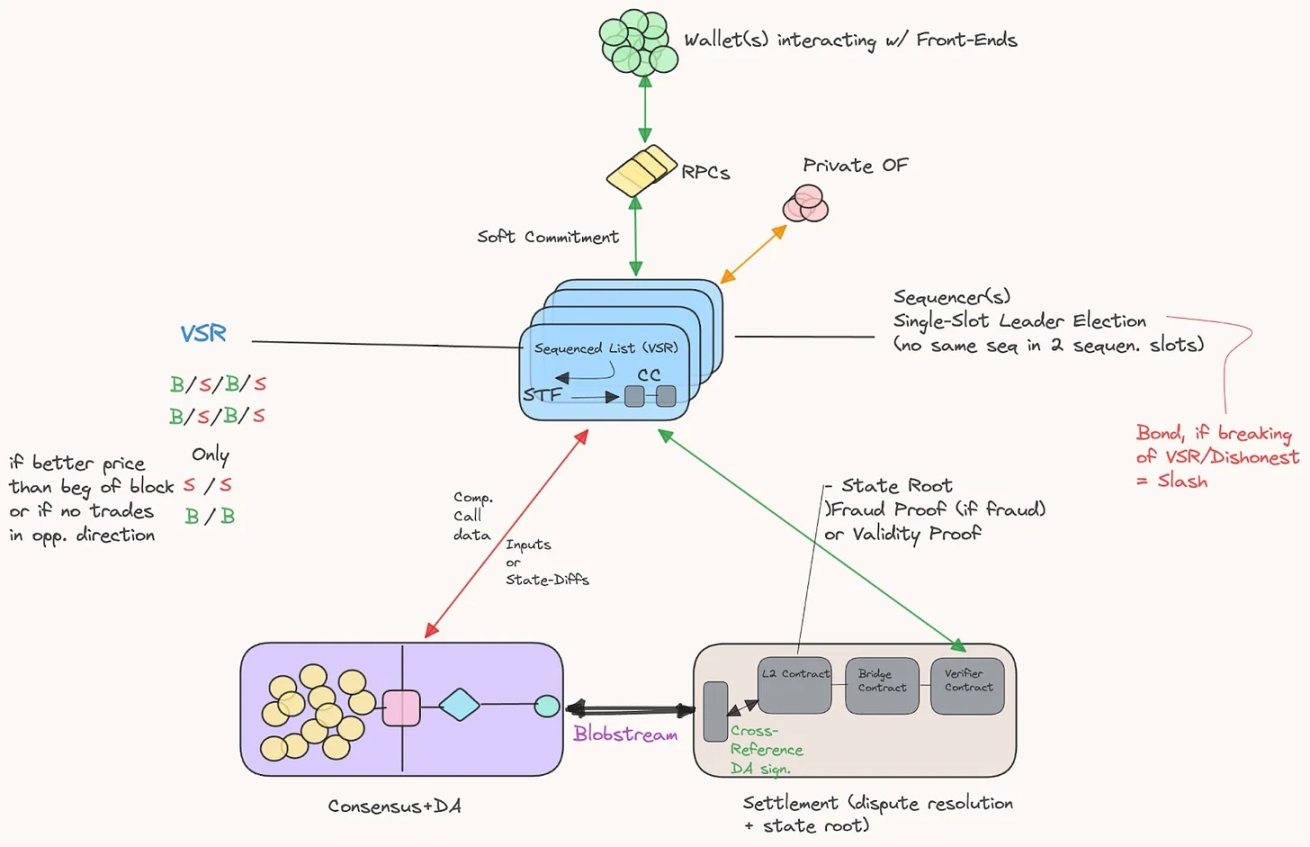

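Another possible idea is trade splitting. Imagine a liquidity pool: how should a large order, one that would cause substantial slippage, be executed? If the trade is executed across consecutive blocks (or at the end of the block, if that complies with the VSR), is that fair to the end user?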

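If the end user cares about latency, they may not want their order split. That case is less common, though, and optimizing for splitting larger orders would likely lead to more efficient execution for the vast majority of users. Either way, one concern is that MEV searchers may become aware of these consecutive trades and try to position their own transactions before or after the trader's. However, because the trade is split into small pieces across a series of blocks, the total MEV extracted is likely to be much smaller.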
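
The effect of splitting can be illustrated with a small calculation. The sketch below assumes a fee-free constant-product pool and, importantly, that arbitrageurs restore the pool to its starting reserves between blocks. Both are simplifying assumptions, and the function names are hypothetical. Without the rebalancing assumption, a constant-product pool is path-independent, so splitting by itself changes nothing; the `pool_resets=False` branch shows that.

```python
def swap_out(base, quote, quote_in):
    """Base tokens received for quote_in on a fee-free x*y=k pool (assumption)."""
    return base - (base * quote) / (quote + quote_in)


def split_fill(base, quote, quote_in, n_chunks, pool_resets=True):
    """Total base tokens received when the order is split into equal chunks.

    pool_resets=True models arbitrage restoring the starting reserves
    between blocks (an assumption, not something the pool does itself).
    """
    chunk = quote_in / n_chunks
    b, q = base, quote
    total = 0.0
    for _ in range(n_chunks):
        out = swap_out(b, q, chunk)
        total += out
        if not pool_resets:
            # No rebalancing: the next chunk trades against the moved price.
            b, q = b - out, q + chunk
    return total


single = swap_out(1000.0, 1000.0, 100.0)
split = split_fill(1000.0, 1000.0, 100.0, 10)
unbalanced = split_fill(1000.0, 1000.0, 100.0, 10, pool_resets=False)
print(f"single fill:            {single:.4f}")
print(f"split, pool rebalanced: {split:.4f}")
print(f"split, no rebalancing:  {unbalanced:.4f}")
```

Under these assumptions the split order receives more base tokens than the single fill, and each chunk moves the price far less, which is also why a searcher has less to extract from any one of them.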

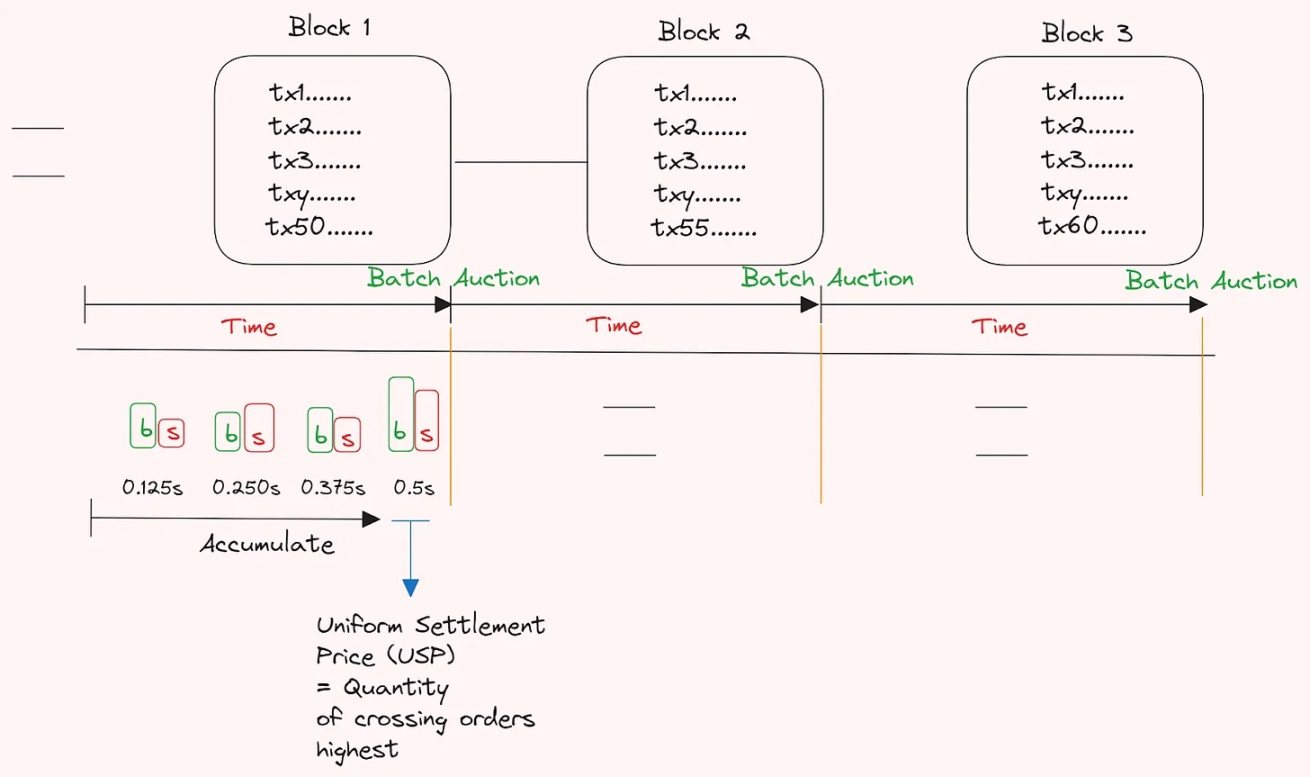

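Another interesting idea we mentioned in a previous post is frequent batch auctions (FBAs), championed by the legendary Eric Budish and his colleagues, which process trades in batch auctions rather than serially. This helps discover coincidences of wants (CoWs) and builds arbitrage opportunities into the market mechanism design. It also helps "combat" latency games in continuous block building (or priority-fee wars in serial blocks). Thanks to Michael Jordan (DBA) for bringing this paper to our attention, and for his work on Latency Roast. Implementing FBAs as part of a rollup's fork-choice and sequencing rules is also an interesting setup available to developers, and we have seen it gain significant traction over the past year, particularly with Penumbra and CoWSwap. One possible setup looks like this: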

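In this setup there is no first-come-first-served ordering and no priority gas war; instead, an end-of-block batch auction is run over the orders accumulated during the time between blocks.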

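In general, in a world where most trading has moved to the non-custodial "on-chain" world, FBAs may be one of the more efficient ways of achieving "true" price discovery, depending on block time. Using FBAs also means that, because all orders are batched and not revealed until the auction ends (assuming some cryptographic setup), front-running is greatly reduced. The uniform clearing price is the key here, because it makes reordering transactions pointless.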
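
A uniform clearing price can be sketched in a few lines. The example below is a minimal illustration, not the mechanism used by any particular protocol: it takes the limit orders collected during one batch, tries every limit price as a candidate, and picks the price that maximizes matched volume (ties go to the lowest candidate, which is an arbitrary choice made here). The function name `clearing_price` and the order format are assumptions.

```python
def clearing_price(bids, asks):
    """Pick one price for the whole batch.

    bids and asks are lists of (limit_price, quantity) tuples.
    Returns (price, matched_volume); price is None if nothing crosses.
    """
    candidates = sorted({p for p, _ in bids} | {p for p, _ in asks})
    best_price, best_volume = None, 0
    for p in candidates:
        # Buyers accept any price at or below their limit,
        # sellers accept any price at or above theirs.
        demand = sum(q for limit, q in bids if limit >= p)
        supply = sum(q for limit, q in asks if limit <= p)
        volume = min(demand, supply)
        if volume > best_volume:
            best_price, best_volume = p, volume
    return best_price, best_volume


bids = [(102, 5), (101, 3), (99, 4)]
asks = [(98, 4), (100, 3), (103, 6)]
print(clearing_price(bids, asks))  # (100, 7)
# The result depends only on the set of orders, not on their arrival order.
print(clearing_price(list(reversed(bids)), list(reversed(asks))))  # (100, 7)
```

Because every matched order settles at the same price and the outcome is a function of the whole batch, shuffling the orders inside the batch gains nothing, which is the sense in which reordering becomes pointless.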

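It is also worth pointing out that designs similar to the one we just described were discussed on the Ethresear.ch forum as far back as 2018 (see the forum thread). In the post, the authors reference two papers that provide a batch auction mechanism on Plasma (something of a precursor to modern rollups), in which each batch accepts orders to buy other ERC20 tokens at some maximum limit price. These orders are collected over a fixed time interval and settled at a uniform clearing price across all token pairs. The general idea behind the model is that it would help eliminate the front-running common in popular AMMs.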

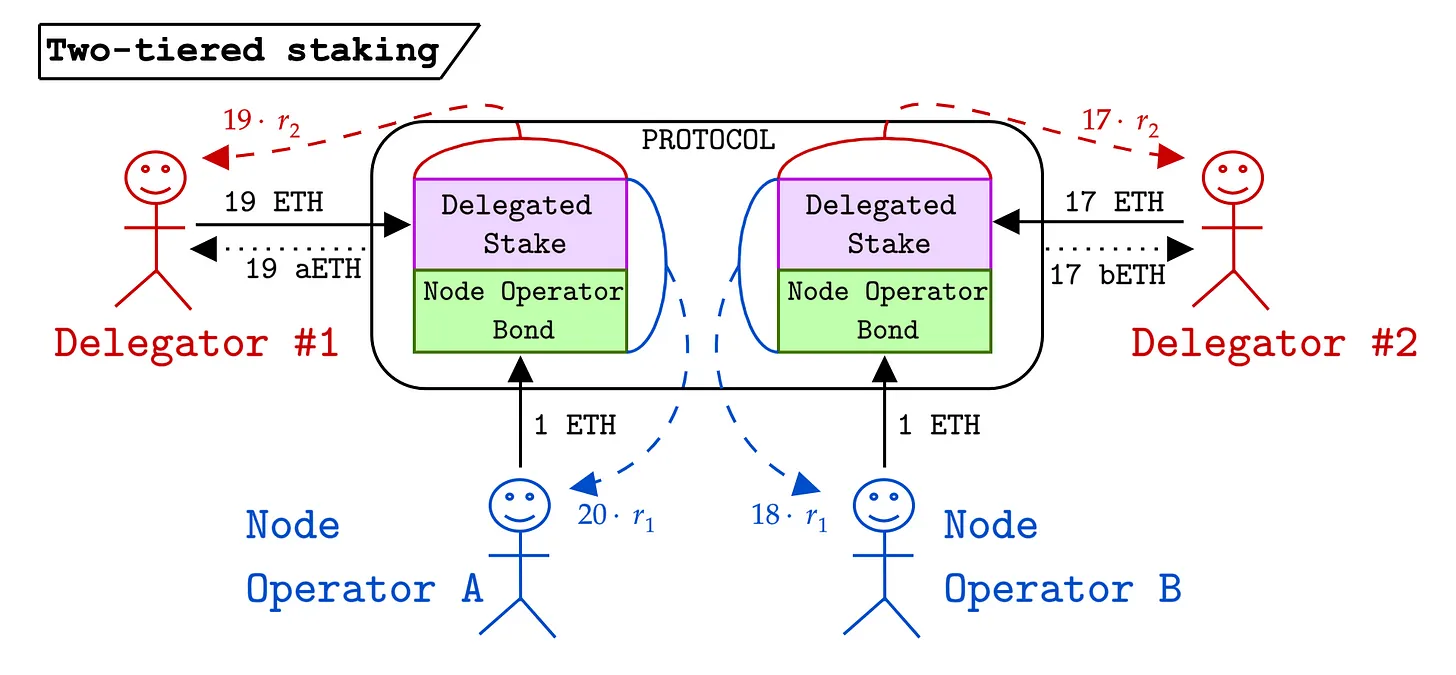

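Another important point is that, in these setups, sequencers may need some incentive to execute (and enforce) the rules above. This is often overlooked, but much of the infrastructure of blockchain networks is run by professional companies whose costs are nothing like those of an ordinary home participant. In general, incentives are an important part of implementing secure infrastructure. Sequencers and builders are also more likely to put in greater effort when incentives are aligned with the rules being enforced. This means there should also be an active market for these setups. Clearly, such markets tend toward centralization, since the capital cost of specializing can be high. As a result, the smartest (and richest) actors are likely to integrate and specialize to capture as much value as possible. Here, exclusive order flow could be an arrow to the knee for some participants, leading to further centralization. A general base fee might be enough, but it does not really push sequencing participants toward specialization. You may therefore want to introduce, through an incentive mechanism suited to your particular case, some notion of traders being satisfied with the outcome.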

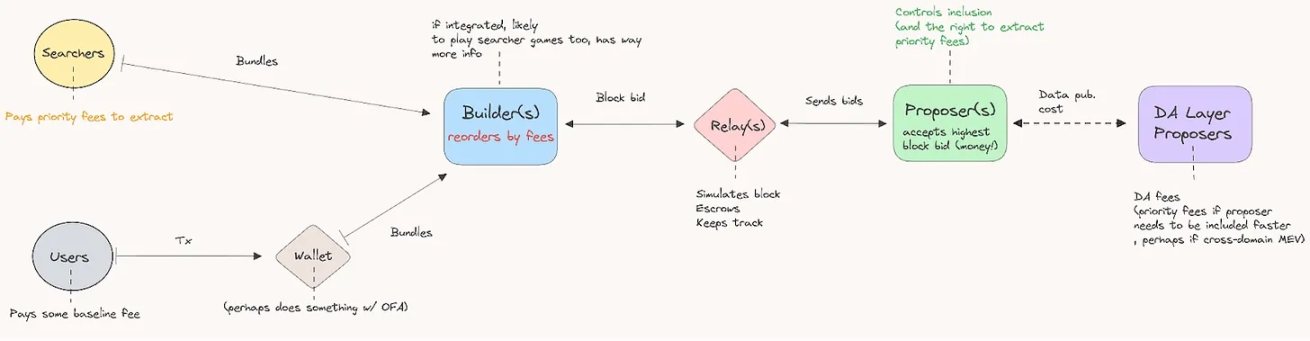

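This is clear to most people, but it still needs mentioning when discussing ordering at the rollup level. If you control ordering, it is easier to "extract" value from the protocol. That is because you control the power to reorder transactions, which on most L1s is usually based on priority fees (MEV-Boost-esque setups). It gives you the priority fees paid by sophisticated actors extracting value on-chain. These actors are usually willing to pay considerable amounts (up to the point where doing so is no longer profitable). Currently, however, most rollups primarily use first-come-first-served ordering. Most MEV extraction there happens through latency wars, which puts serious strain on rollup infrastructure. For these reasons, we may see more and more rollups begin to implement ordering structures with a notion of priority fees (for example, Arbitrum's Time Boost mechanism).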

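Another example we like is Uniswap. Currently, Uniswap as a protocol "creates" a large amount of inefficiency. That inefficiency is exploited by actors seeking to extract MEV (arbitrage, at the expense of liquidity providers). At the same time, these actors pay large fees to extract the value, yet none of it ends up with the Uniswap protocol or its token holders. Instead, a large share of the extracted value is paid as priority fees to Ethereum proposers (validators) via MEV-Boost, for the right to be included at a particular point in a block that allows the value to be captured. So although Uniswap order flow contains a great deal of MEV opportunity, none of it is captured by Uniswap.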

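If Uniswap could control ordering within the protocol (and with it the ability to collect priority fees from searchers), it could monetize this, and perhaps even pay some of those profits to token holders, liquidity providers, or others. With changes at Uniswap (such as UniswapX) moving toward off-chain execution (with Ethereum as the settlement layer), this kind of mechanism looks increasingly likely.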

If we assume a rollup with some form of PBS mechanism, the order flow and monetization flow might look like this:

From this, the monetization of a rollup's sequencers and proposers might follow this formula:

Issuance (PoS) + fee revenue (+ priority fees) - cost of DA, state publication, and storage

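A good place to see how much value is currently being extracted on Ethereum (arbitrage in particular) is Mevboost.pics, which gives a nice overview of how much value can actually be extracted from inefficiencies.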

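In addition, decoupling priority-fee gas wars from the off-chain structure isolates MEV extraction to the execution environment, which helps contain supply-chain disruption. However, if leader election happens on the rollup, most MEV will be extracted on the rollup, which leaves little for the underlying layers, unless priority fees for DA-layer inclusion or on the settlement layer arise from liquidity consolidation or other economies of scale.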

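To be clear, many of these structures can work as purely off-chain constructs, without any verifying bridge or strong security guarantees. Some trade-offs have to be made there, however. We are starting to see more of these appear, both live and in stealth. One point we want to make is that a modular setup does not necessarily mean a rollup.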

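The sequencing rules above are one example of how fine-tuning infrastructure can substantially improve the applications built on top of it.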